Goal and problem

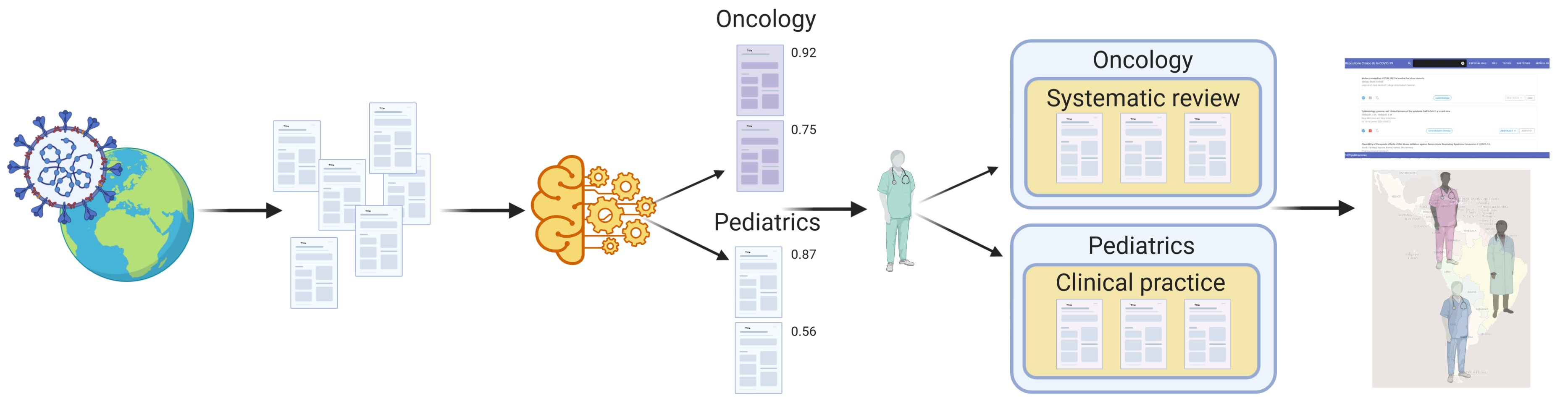

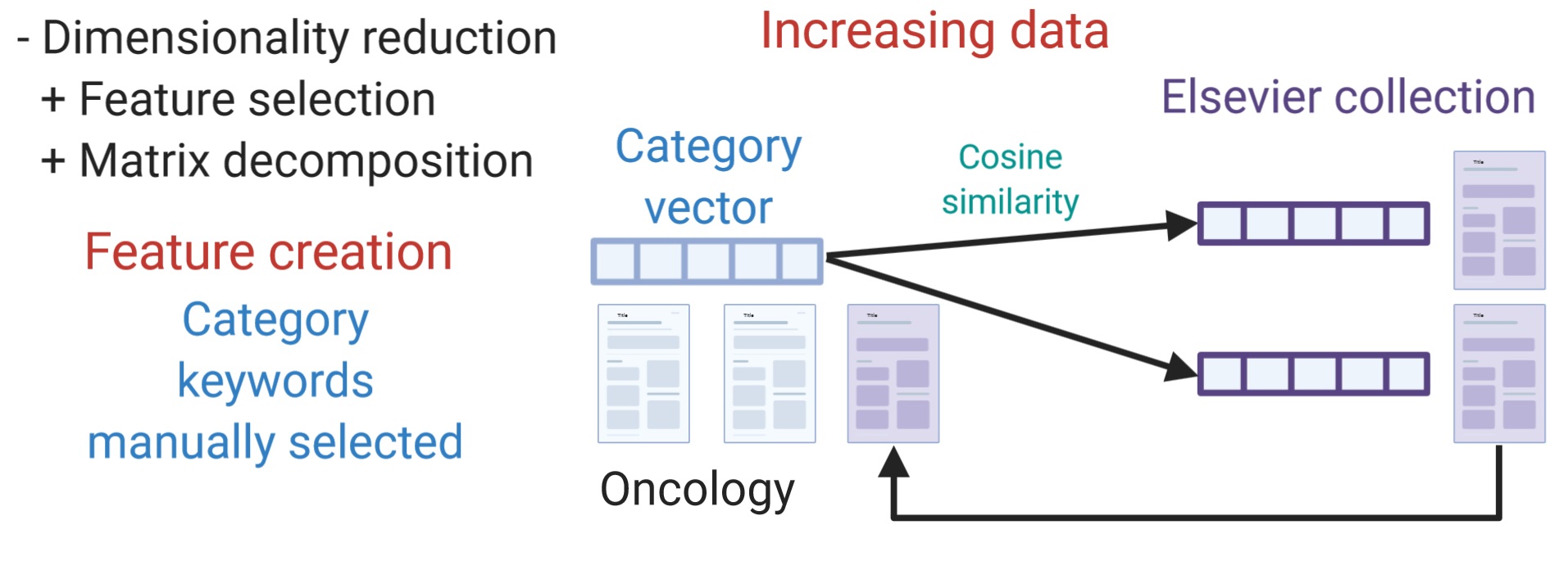

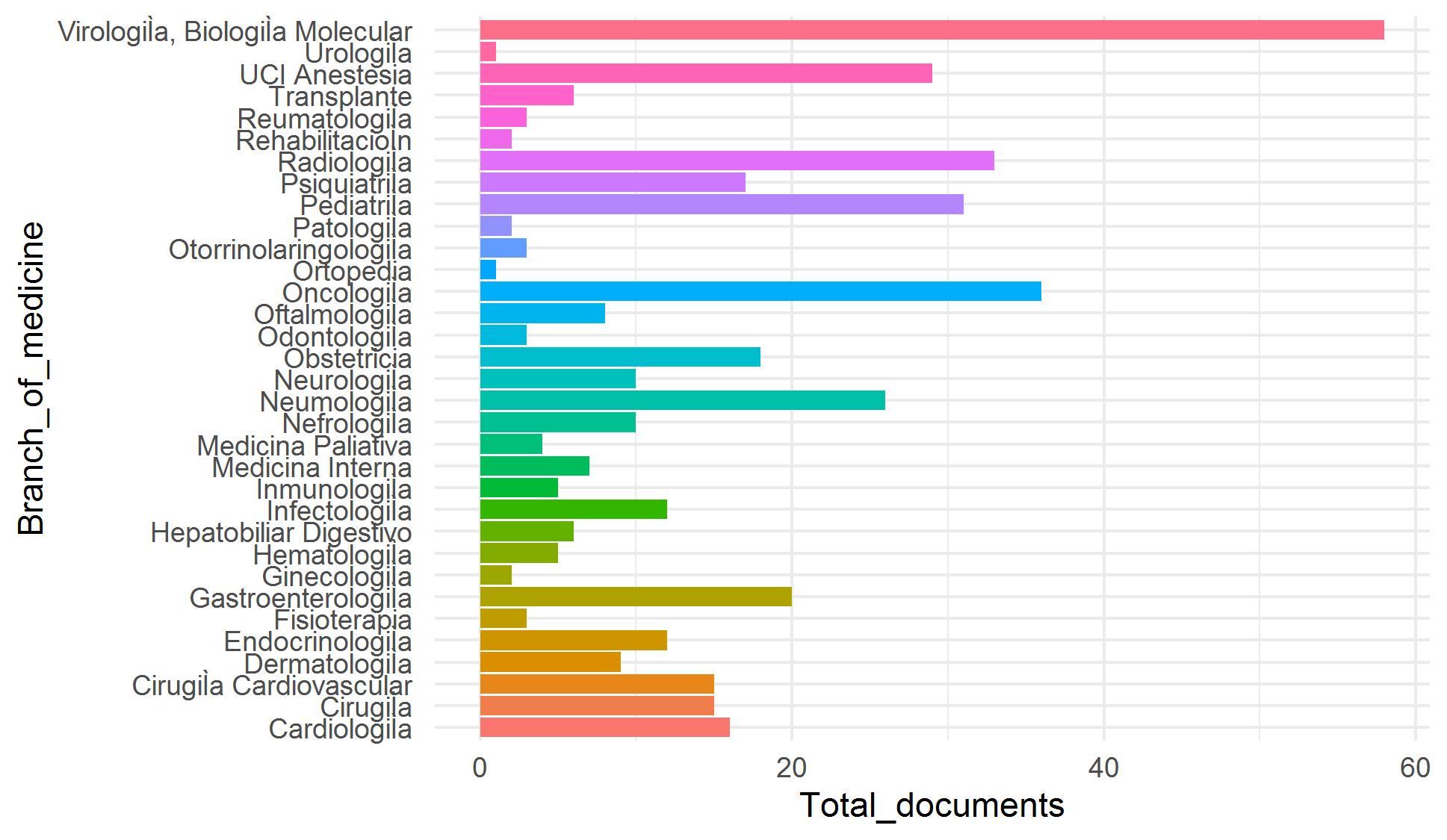

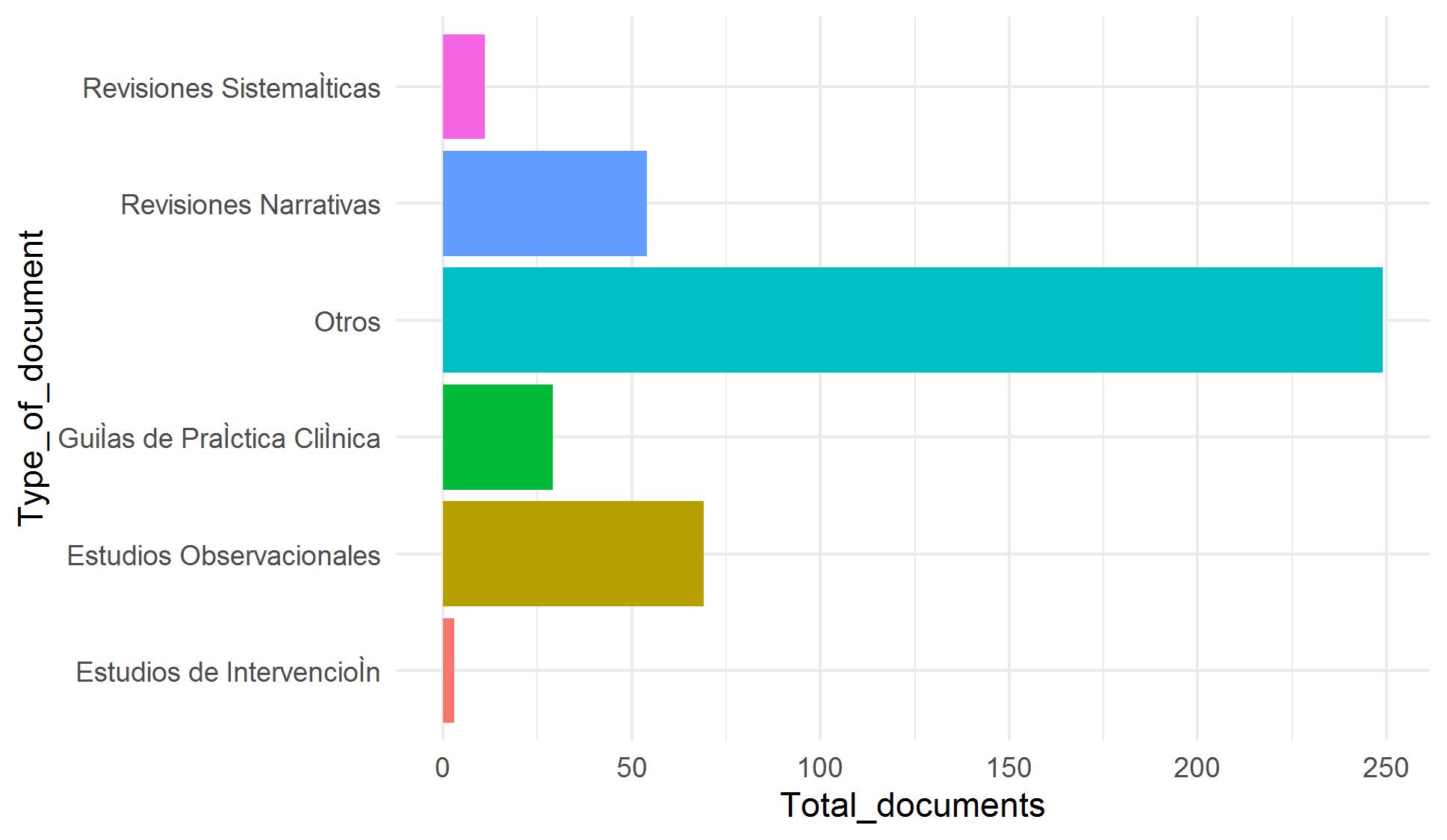

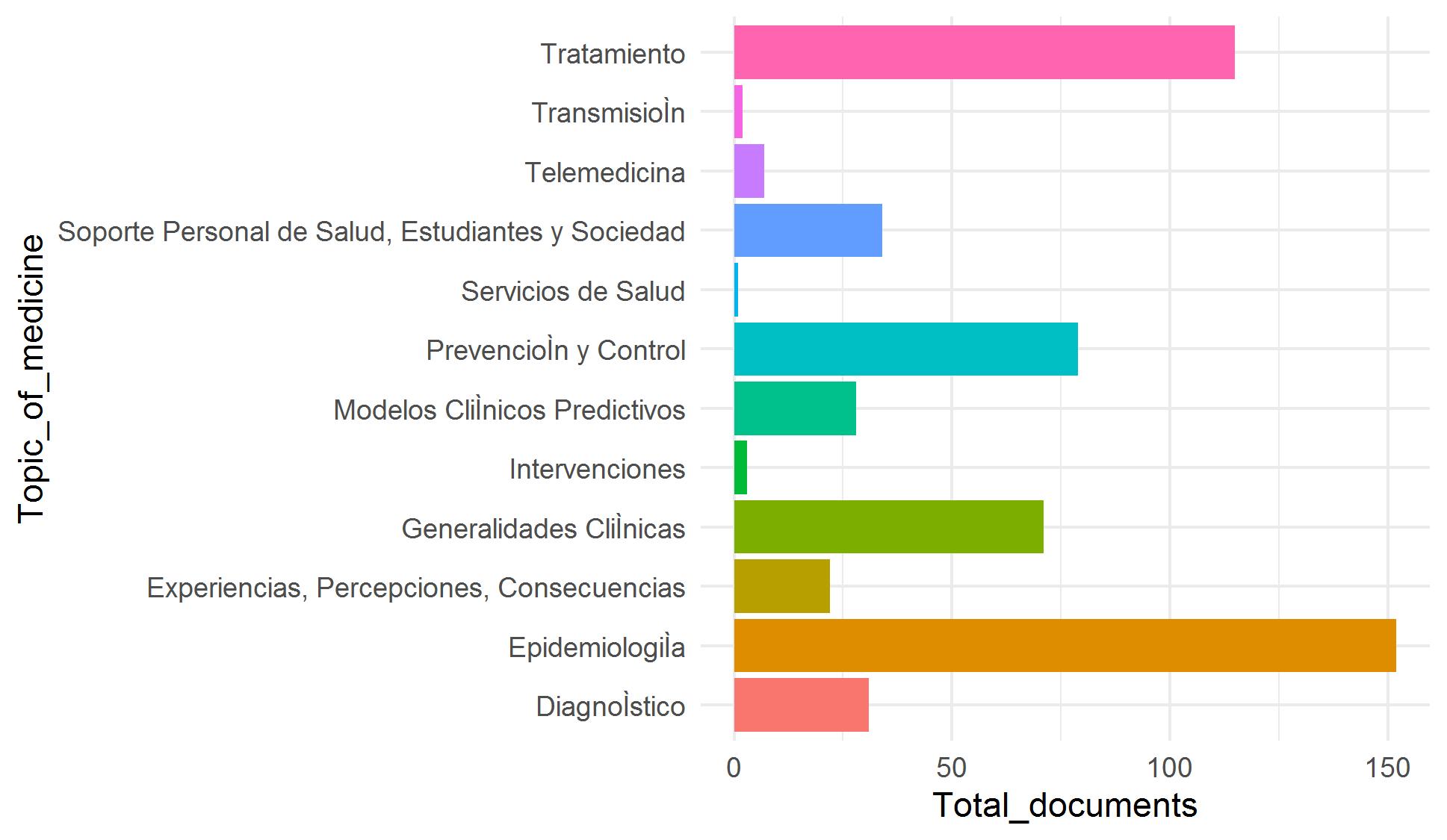

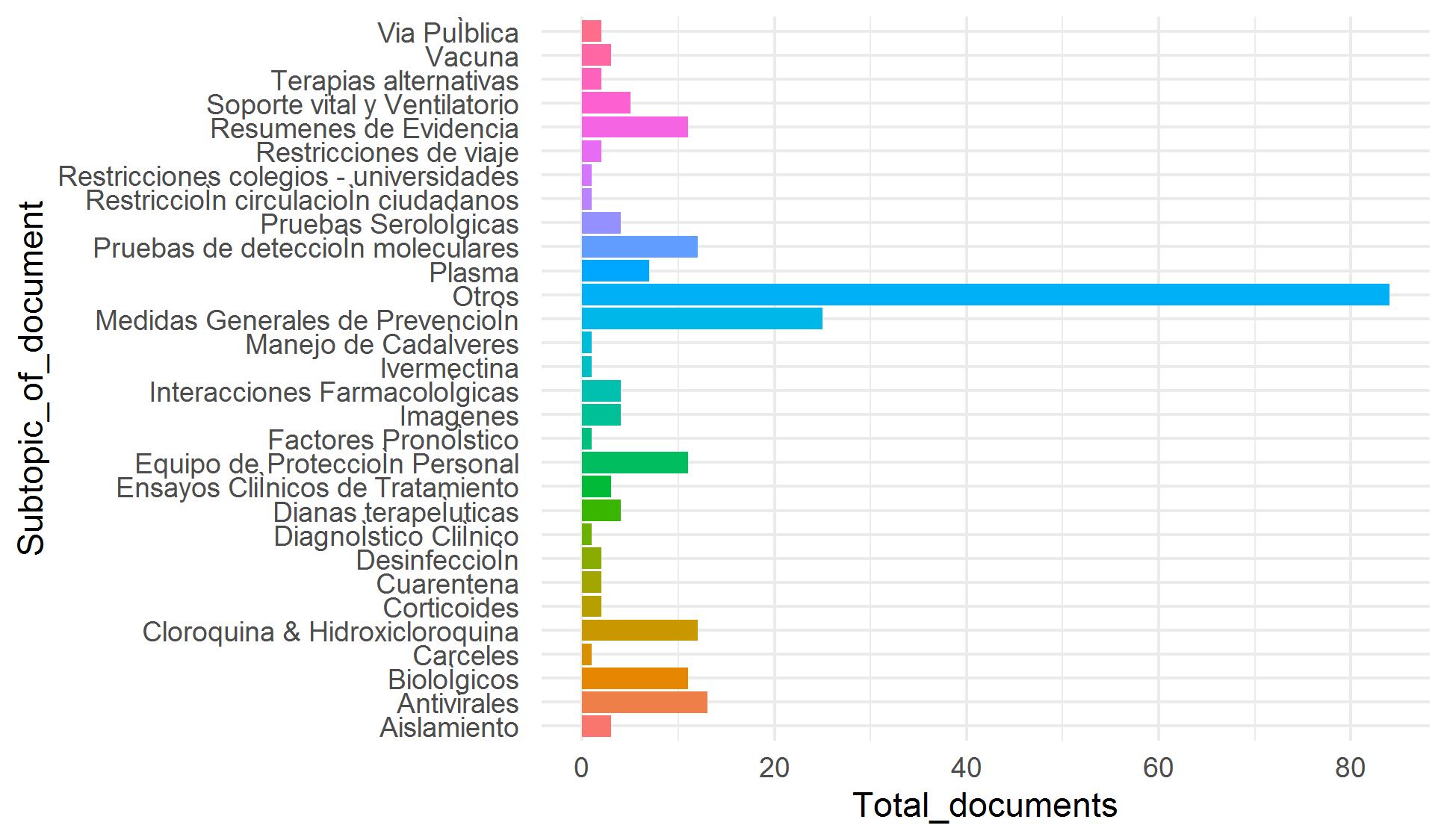

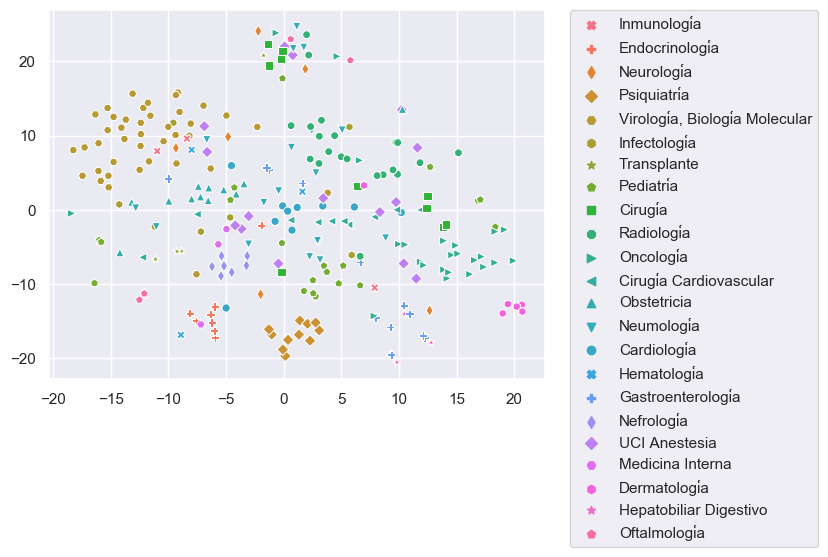

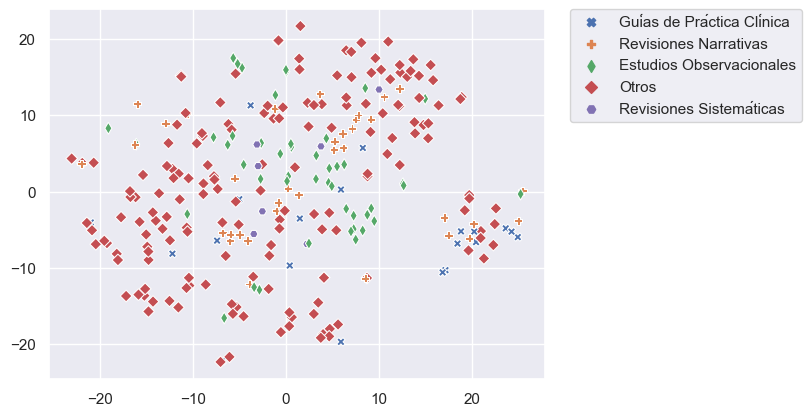

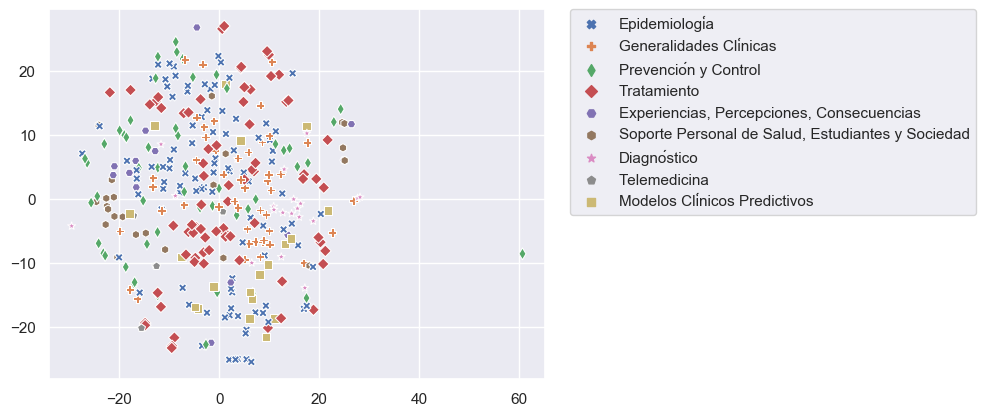

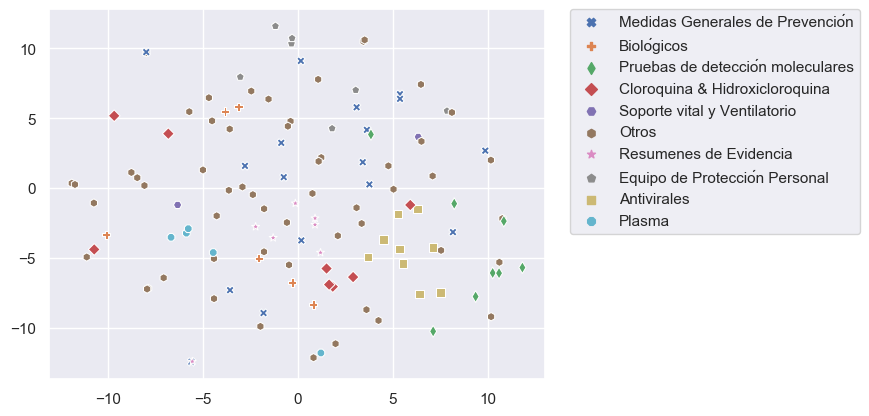

Our goal is to incorporate thousands of articles from the Elsevier and LitCovid collections into our COVID-19 repository. Because classifying these articles manually is demanding and time-consuming, we have worked on an assisted classification strategy that uses supervised learning techniques, with manually classified articles as training data. We faced four problems in achieving this goal: tiny training data; imbalanced classes, that is, categories with few examples (Figure 1); categories with overlapping data, especially for topic (Figure 2); and high dimensionality (few rows and many columns).
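One common way to counteract imbalanced classes is to weight each category by its inverse frequency, so that rare categories contribute more to a weighted training loss. The sketch below is only an illustration of that general technique, not necessarily the method used here. It uses the formula `n_samples / (n_classes * count_c)`, the same rule scikit-learn applies for `class_weight="balanced"`. The function name `balanced_class_weights` and the category labels are hypothetical.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * count_c).

    Rare categories get weights above 1 and frequent ones below 1, so a
    weighted loss does not simply favor the majority classes.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {c: n_samples / (n_classes * k) for c, k in counts.items()}


# Hypothetical manually classified labels (not the real training data).
labels = ["treatment"] * 6 + ["diagnosis"] * 3 + ["transmission"] * 1
weights = balanced_class_weights(labels)
for category, w in sorted(weights.items()):
    print(f"{category}: {w:.2f}")
# diagnosis: 1.11
# transmission: 3.33
# treatment: 0.56
```

With these weights, every category carries the same total weight (count times weight), so the single "transmission" example counts as much in aggregate as the six "treatment" examples. Reweighting does not add information, however. With tiny training data and overlapping categories, it mainly shifts which errors the classifier prefers to make.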
